In January I had the worst week of my life. My wife and I joked we wanted to start 2016 in February. Within a single week I lost my job and we had a miscarriage. In this post I want to tell you about this traumatic experience, how this massive change has turned out to be positive, and what I learned from it.

Before

I started this year with all the optimism in the world. Throughout the holidays leading up to 2016, I tried to refocus myself. The year started with new habits and influences which improved my outlook on life. I was determined to be more positive and work hard at doing a great job.

This was a stark contrast to the previous several months which were filled with ups and downs.

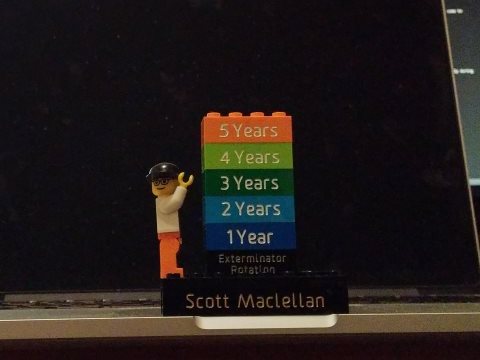

My time with the Exterminators was a blast. I felt re-energized and excited about software development again. While with the team I found new growth areas and doubled down on existing skills. This left me wanting more.

Returning to my previous team was jarring. When I left I was excited about a new project which would have a huge impact. Incorrectly, I thought I would get to dive into it right away. After I had started prototyping portions of it, the whole project was given to another team.

Instead, I was relegated to ongoing maintenance on a different project. My learning slowed. Shortly afterwards our team split in two. Another project I was excited about went with the other half of the team. I felt like I had been punted to the B team. My heart was not in my work. Focus and quality suffered.

In the months leading up to January I was contemplating a larger change. The essential question was whether I could still have an impact in my job or company. Getting things done was frustrating and I did not deal with this well. Over time my morale eroded further as I considered greener pastures elsewhere.

However, during a company-wide developer conference and the holidays I started to see life differently. In my role I might have plateaued, but I realized D2L as a whole still has many great things to offer. I worked with phenomenal people like Craig and Daryl whom I could still learn from. D2L operates on a large scale and has different challenges which are exciting. Lastly, I fully believe in D2L’s mission of transforming learning.

In January I hit the ground running. I reset my perspective. I was excited again. Each task I worked on received my complete attention. I was crushing it again. Coming to work each day was fantastic. We had great projects lined up and we were making a difference.

Terminus

The company mentioned there might be some changes coming later in the month. In the past our team had been completely immune. After all, our team was doing critical work which could dramatically improve how the company operates. I considered myself an important contributing member of the team and in my hubris didn’t think anything of it.

On that fateful January 26th, my manager, who seemed a little choked up, came and got me at my desk. This was not like him. I felt a pang of fear, and then it happened. My time at D2L was finished. I was given the paperwork, told the terms and then escorted to a conveniently located exit nearby.

I was absolutely stunned. It was difficult to speak. On my way out I bumped into one of my mentors and could barely tell them what happened. It took everything to stop myself from crying.

We had planned our life out around my job. This was one thing I thought I could depend on. I had worked there for over 5.5 years. In the previous few years we bought a house and had our son, Jude. How were we going to afford everything? Even in our wildest contingencies we were not ready for me to lose my job. The news was overwhelming.

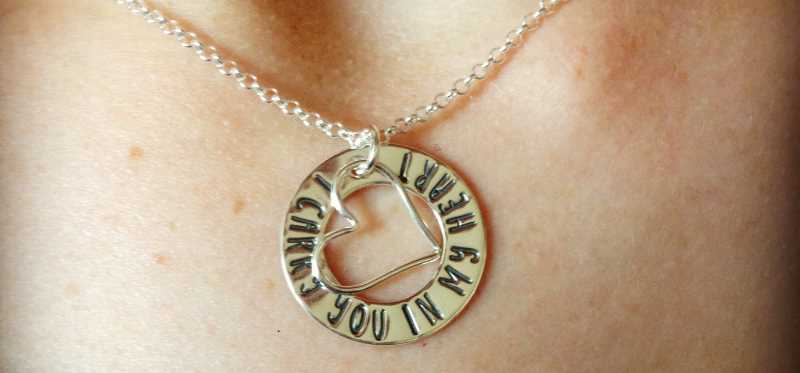

Then the second bombshell went off. My wife was expecting our second child. She was having signs things were not right. The day after I was let go we lost the baby. Even months later, I am immediately brought back to the devastation we felt. All we could do was hold each other and cry. As I write this I am crying again as the memories come flooding back.

This was the worst week of my life.

Doubt

You are your own worst enemy. I spent too much of the next few months rethinking everything. Was there something I did? Something I didn’t do? Why was I chosen? Not knowing why is perhaps the hardest part.

Even now I doubt myself. Have I been a phony for all these years? Am I a horrible programmer or worse? There is an interesting cognitive bias known as the Dunning-Kruger effect where the truly incompetent cannot possibly know how bad they are. They lack the meta-thinking to understand their own faults. In short, you can be so bad you don’t realize it. Is that me?

I talked a little bit about my self-doubts in my post ‘Are you your code review?’. They have always been with me. This event fanned the flames of those doubts even more.

Leading up to the exit interview I wrote lists of things which I thought might have led to this outcome. Talking to my former boss helped clear up my lists. He highlighted areas for improvement and made me feel like I was not a failure.

Getting past my self-doubt and focusing on moving forward was the first step. This easily becomes a dark line of thinking which only leads to self-destruction.

Acceptance

Our family took the first week to recover and get back to normal. There was no changing what had happened. Daily life was permanently altered. We needed to accept this new reality and move forward.

During those first few days the weirdest thing I needed to accept was that my coworkers were suddenly not there. Waking up every morning I was confronted with the fact that I would not be seeing Travis and Josh that day. Many of my coworkers had grown into close friends on whom I rely a great deal. I was always happy to see them again or beat them at foosball. Our relationship would need to change if it was to survive.

Another odd thought experiment was the other choices D2L could have made. Was I chosen instead of other people? Would my staying have meant someone else leaving? In the end I felt it was better that I was chosen instead of someone else. Better I go through this than one of my friends. After our life had normalized a bit, I was optimistic we would be okay and didn’t want them to have the same burden.

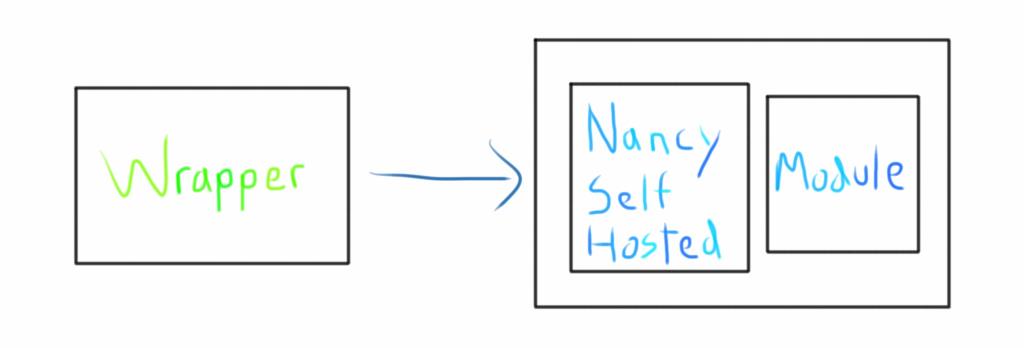

Although being asked to leave was not great, it did open the door for new opportunities. With our last several projects I had been dipping my toes into different technologies and was really enjoying it. Now I had the chance to make a bigger shift in my career.

Searching

Getting a job in the past was a fairly straightforward process:

- Write a resume

- Find jobs

- Write cover letters

- Apply

- Interview

- Repeat or accept the offer

This time around everything happened at the same time and looked like:

- Network, find jobs and apply all at once

- Interview

- Review and accept offers

During this job hunt I learned how invaluable your connections are in finding new jobs. Over the years I had worked with many different people who had moved on from D2L to other companies. I had great role models at D2L who were willing to be references.

Thanks to my network, finding potential jobs changed dramatically. The first step in my search was to talk to my connections. Right away I was introduced to potential companies. I went for coffee to learn about open positions. Casual meetings turned into interviews.

Finding potential jobs in Kitchener-Waterloo is also extremely easy thanks to services through Communitech. They run an active job board which has hundreds of fantastic postings available. I found many interesting companies with a diverse range of sizes and needs.

A number of recruiters reached out with various opportunities. While I did not use their services, they showed me positions typically outside of my existing network. They helped restore my confidence that I would be able to find a new job and move past my doubts.

Resumes and cover letters are a must. Within the first week I had mine updated and was ready to share it with the world. It slowly got better over time with more and more feedback. Luckily, I had updated it sporadically with different achievements and milestones.

Interviews are intimidating. It had been over 5 years since I last interviewed at all. Co-op terms at university gave me a lot of practice, but without using those skills for years I found I was very rusty. My friends helped me through mock interviews to get me ready. Even so, I bombed a few of them, which was tough.

Regardless of how an interview went, I always sent a follow-up email to:

- Thank the interviewer

- Emphasize what went well

- Address any hiccups

- Reiterate why I would be a great fit

Due to my background I am weaker at algorithms and get caught up on some standard interview questions. I spent a lot of time practicing interview problems and reading up on algorithms. When that wasn’t enough I tried learning Ruby and refreshing my knowledge of JavaScript frameworks/idioms. After coming home from interviews, I implemented the programming questions I was given to double-check my solutions.

Success

Things started slowly then picked up pace. At first I just had coffee with former colleagues/mentors. Interviews started to trickle in and then really accelerated. During the last week I had a flurry of final interviews leading up to a difficult choice.

In the final stages I was fortunate to have several offers from great companies. Each presented different opportunities and challenges. I don’t think I could have gone wrong choosing from any of the options. Other interviews were not successful and I will need to try again another time.

Over the weekend I made my decision. Due to the timing I had another week off to relax and decompress before starting work. The end was in sight after the harrowing month which preceded it.

On March 7th I started working at Vidyard full time! Even before the first day I was blown away by the amazing culture. They were so welcoming and helped me get connected right away. On my second day I pushed code to production! I am thrilled to be part of the team.

Lessons

What may have been the worst week of my life at the time will now be the start of a bigger change in my life. This entire experience has taught me so much and helped me understand what matters most.

At D2L, I poured a lot of time, effort and self-worth into my job, only to see it come to an end. I was slowly learning work should not be my #1 priority. This experience brought that realization painfully to the forefront.

What really matters is our relationships and loved ones. The quiet moments as a family during the first week will stay with me forever. I cannot thank enough the many friends and family members who supported us through this challenging period. We are so fortunate to have them in our lives.

We needed to accept what had happened to move on. Hopefully some day we will have other children. I found another job. We can learn from our past, but should not dwell on it.

Interviewing is a skill you need to keep practicing. From now on I plan on regularly interviewing and updating my resume so I will not become rusty again. The goal is not to job hop every year. Instead, I want to understand what people are looking for and be able to confidently present myself.

I was due for a change and believe I have found what I needed. While my time at D2L was a great learning experience, I was restless and wanted something else. During several low points while at D2L I had felt like leaving. I was never able to make the choice on my own and now the choice was made for me.

Epilogue

Why write this post now? I am finishing my probation and feeling more comfortable at Vidyard. After the event I was so shaken up I decided to put the blog on hold. Starting a new job I wanted to make sure my first 3 months were solid.

Well, mostly solid. Almost immediately after starting I was sick for a full 3 weeks. In the first month I worked from a bed at home more than I did at the office. Although I was sweating bullets while coughing up lung chunks, my manager was extremely supportive. My coworkers all told me not to worry and how later I could look back and laugh about it. I had their trust to do what I needed to do and the space to get better. The entire experience was yet another testament to why Vidyard is awesome.

Now, I have shipped a few features and am working on something bigger. It has been a blast. I feel like I have learned so much in very little time. Hopefully, in the coming weeks I will be able to share what I have learned with you.

Thanks to my former co-worker Josh for helping me with the grammars. Sorry for turning down your edit about who wins at foosball. Thanks for the many years we spent together at D2L. I miss you, canoe buddy.

I would like to thank my lovely wife Angela for helping review this post. I would be lost without you. I love you.